Asked and (poorly) answered...

Every time someone who doesn’t understand technology talks about AI they seem to have a similar take…it’s come this far this fast, imagine the next five to ten years!

I would suggest that ten steps towards the wall does not carry with it the certainty of infinite progress you might think. Looking up often helps gain context.

So let’s check in with AI once again for a simple test. The prompt is not complicated, and it should be something anyone who actively uses PowerShell and Citrix can do in a short amount of time.

“Write a powershell script that lists what delivery groups a citrix application is published to and what priority each delivery group is set to.”

Let’s see what we get…Gemini, you are up first…we won’t be including any superfluous post text telling us we need to install the PowerShell module or reminding us that you can just go use the console to find out the information…let’s just look at the code.

# Import the Citrix PowerShell module

Import-Module Citrix*

# Get the application name you want to check

$ApplicationName = "YourApplicationName"

# Get all delivery groups

$DeliveryGroups = Get-BrokerDesktopGroup

# Find the application in each delivery group and get its priority

foreach ($DeliveryGroup in $DeliveryGroups) {

$ApplicationInGroup = Get-BrokerApplication -DeliveryGroupName $DeliveryGroup.Name | Where-Object {$_.Name -eq $ApplicationName}

if ($ApplicationInGroup) {

$Priority = $ApplicationInGroup.AssociatedDesktopGroupPriorities[0]

Write-Host "Application '$ApplicationName' is published to Delivery Group '$($DeliveryGroup.Name)' with priority $Priority"

}

}

First test, first fail…Get-BrokerApplication does not have a parameter -DeliveryGroupName, and even if it did, listing out AssociatedDesktopGroupPriorities is just going to list an array of numbers, which is…not useful…

Google gets to fail twice! Let’s just search Google for “Citrix cloud powershell list delivery group priority sdk”. I couldn’t get it to generate an AI response without the “sdk” bit, so it’s not the exact same prompt, but it’s the closest I could get and still have it generate an AI response and not just web results.

# Import the Citrix PowerShell module

Import-Module Citrix.AD.Broker

# Get all delivery groups and their priorities

$deliveryGroups = Get-BrokerDesktopGroup

# Loop through and display delivery group names and priorities

foreach ($group in $deliveryGroups) {

Write-Output "Delivery Group: $($group.Name), Priority: $($group.Priority)"

}

I don’t even…what is this? Again, the Priority attribute does not exist so this will never work…and the module…but…why?

Just…Add-PSSnapin Citrix* and stop making it weird…

Ok copilot…you’re next…

# Load the Citrix PowerShell snap-in

Add-PSSnapin Citrix*

# Specify the application name

$appName = "YourApplicationName"

# Get the application's associated delivery groups

$app = Get-BrokerApplication -Name $appName

$deliveryGroups = Get-BrokerApplication -Name $appName | Select-Object -ExpandProperty AssociatedDesktopGroupUids

# Iterate through each delivery group and get the priority

foreach ($dgUid in $deliveryGroups) {

$deliveryGroup = Get-BrokerDesktopGroup -Uid $dgUid

$priority = $app.AssociatedDesktopGroupPriorities[$dgUid]

Write-Output "Delivery Group: $($deliveryGroup.Name), Priority: $priority"

}

Closer…but still wrong. It’s trying to index the priority array using the delivery group Uid. So the AssociatedDesktopGroupPriorities is going to contain something like “0,0,1” and the AssociatedDesktopGroupUids is going to contain something like “45,62,78”, and if you hit up Get-BrokerDesktopGroup -Uid 45 it will give you information about that delivery group. So it’s…wandering nearer the correct direction than Gemini did, but it’s still utterly useless; if you know how to fix this, you could have written it faster without it. ChatGPT 4o is up next…that is the paid model as of writing.

# Import the Citrix PowerShell snap-in

Add-PSSnapin Citrix.Broker.Admin.V2 -ErrorAction SilentlyContinue

# Define the application name to query

$ApplicationName = "YourApplicationName" # Replace with the name of the application

# Get the application details

$Application = Get-BrokerApplication -Name $ApplicationName

if (-not $Application) {

Write-Error "Application '$ApplicationName' not found."

exit 1

}

# Display application details

Write-Output "Application: $ApplicationName"

# Get the associated delivery groups and their priorities

$AssignedDeliveryGroups = Get-BrokerAccessPolicyRule -ApplicationUid $Application.Uid | `

Select-Object -Property DesktopGroupName, Priority

if ($AssignedDeliveryGroups) {

Write-Output "Delivery Groups and Priorities:"

$AssignedDeliveryGroups | Format-Table -Property DesktopGroupName, Priority -AutoSize

} else {

Write-Output "No delivery groups are associated with this application."

}

Hands down the most code generated, and I DO appreciate an attempt to make pretty output…if only that output…existed…

Three models, three different modules imported to do the job. That isn’t a fault, just funny. The fault, however (I mean, there are a few, but the showstopper) is Get-BrokerAccessPolicyRule.

I have no idea why it thinks the access policy rule is where the priority is located, but it isn’t…and again -ApplicationUid is not a valid parameter for the cmdlet…again fixing this would be harder and require as much knowledge as writing it yourself…

I guess my job is safe for another quarter…the super complex code that DOES work btw is this:

Add-PSSnapin citrix*

$uids = Get-BrokerDesktopGroup | select PublishedName,Uid

$application = Get-BrokerApplication -Name "Web\WorkDay"

$i = 0

foreach($item in $application.AllAssociatedDesktopGroupUids) {

Write-Host ("Group: {0} - Priority {1}" -f (($uids | Where-Object{ $_.Uid -eq $item}).PublishedName,$application.AssociatedDesktopGroupPriorities[$i]))

$i++

}

Well, OK, I say works, but there is another unrelated issue that has to do with the structure of the data. It turns out AllAssociatedDesktopGroupUids and AssociatedDesktopGroupPriorities are not in the same order, so it’s kind of useless…which, if AI can’t figure out how to do the base task, it damn sure won’t know that it is broken at a structural level…I guess my job is safe for six more months!
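For anyone who wants to see the index-pairing idea without a Citrix site handy, here is a minimal sketch using mock data. The objects and values are invented stand-ins (not real cmdlet output), and it is only as trustworthy as the ordering of the two arrays, which is exactly the catch described above.

```powershell
# Mock data standing in for Citrix objects (hypothetical values, not real output).
$groups = @(
    [pscustomobject]@{ Uid = 45; PublishedName = 'Finance' }
    [pscustomobject]@{ Uid = 62; PublishedName = 'HR' }
    [pscustomobject]@{ Uid = 78; PublishedName = 'Sales' }
)
$application = [pscustomobject]@{
    AllAssociatedDesktopGroupUids    = @(45, 62, 78)
    AssociatedDesktopGroupPriorities = @(0, 0, 1)
}

# Build a Uid -> name lookup once instead of filtering the list on every pass.
$nameByUid = @{}
foreach ($group in $groups) { $nameByUid[$group.Uid] = $group.PublishedName }

# Pair position i of the Uid array with position i of the priority array.
# This assumes both arrays share the same ordering.
$report = for ($i = 0; $i -lt $application.AllAssociatedDesktopGroupUids.Count; $i++) {
    $uid = $application.AllAssociatedDesktopGroupUids[$i]
    [pscustomobject]@{
        Group    = $nameByUid[$uid]
        Priority = $application.AssociatedDesktopGroupPriorities[$i]
    }
}
$report | Format-Table -AutoSize
```

The hashtable is just there so the name lookup doesn’t re-scan the group list on every iteration.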

Getting Started with VSCode and GitHub

Step 1: Download VSCode and install it.

The settings I personally choose...

Step 2: Configure PowerShell syntax highlighting and VSIcons

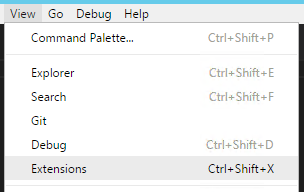

Click View -> Extensions

Click Install next to vscode-icons, this isn't required it is just a really nice touch IMO...

After you click install don't click reload, search for powershell and click Install next to PowerShell (the StackOverflow extension is awesome too!), click Reload when it is done...

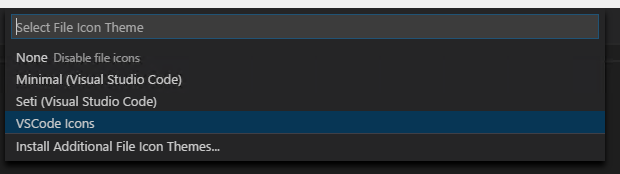

Click File -> Preferences -> File Icon Theme...

Select VSCode Icons from the list...

Step 3: Download and Install Git SCM

For most users simply selecting the default options for the installation is sufficient. There are a lot of questions, but for most, the default is fine...

Step 4: Basic Git Configuration

git config --global user.name "Bob Dole"

git config --global user.email "user@email.com"

Step 5: Create and Pull a GitHub Repository

When you log into GitHub, in the upper right corner you will see a + symbol, click that and choose "New Repository"

Enter a Repository Name, in this case I chose PowerShell-Scripts, description is optional

Launch Git BASH (this was installed as part of Git) and navigate to the local location you want the repository to reside at.

Once there type the following:

git clone <https address of your repository>.git

You will be prompted to log in to GitHub...

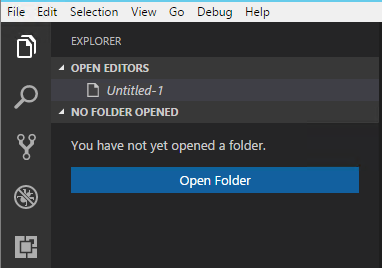

Once you log in you now want to open the folder you just cloned the repository to (the way we did it there should be a sub folder called "PowerShell-Scripts" located at the path you were in when you ran the git clone). Click the Explorer tab on the left hand Activity Bar.

Browse to the folder you cloned the repository to.

Once you have opened this folder click File -> New File

Once the file is open we need to tell VSCode that this is a PowerShell file, if you open a .ps1 file it automatically knows, in the case of a new file we need to tell it, so hit CTRL+SHIFT+P and type "change lang" and hit enter and type "ps" and hit enter, now VSCode knows which Syntax and IntelliSense to use.

You will also see that upon doing that it will open a PowerShell terminal pane below your script pane.

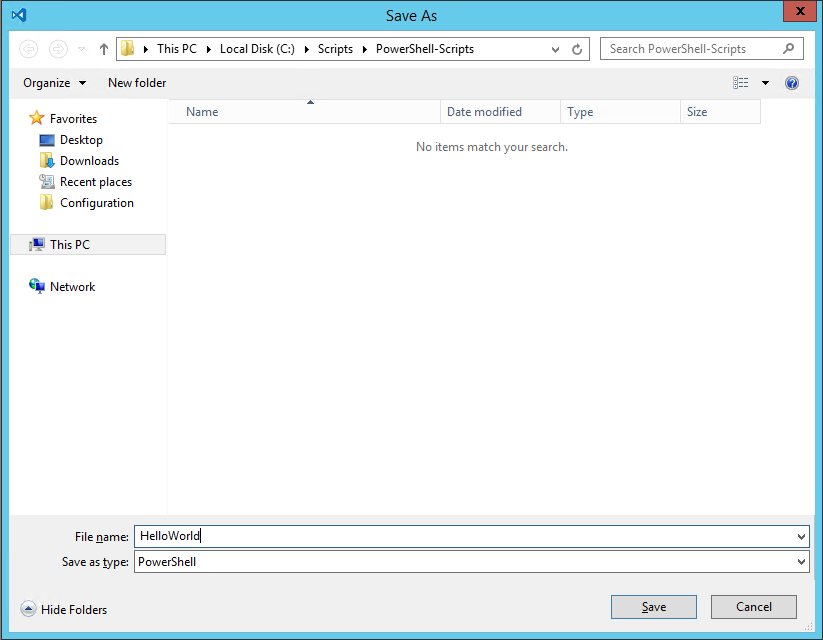

After you type some example code click File -> Save (or hit CTRL+S) and save your file to the folder your repository exists in.

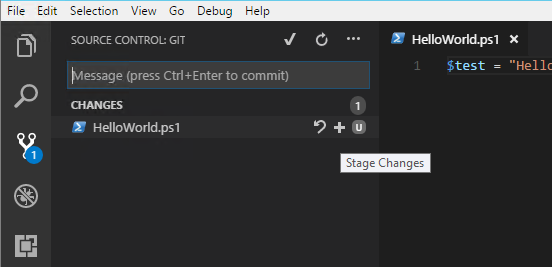

Click on Source Control in the Activity Bar and you should now see your file there. When you hover over it you should see a + symbol; click that, this stages the changes you just made.

You should see the "U" change to an "A". Click the Check Mark at the top of the pane.

It will ask you to enter a commit comment, this should be a succinct description of the changes you are committing, in many cases your first commit comment will be "initial commit". When you view the files on GitHub you can see the commit comment associated with the last commit that included that individual file/folder.

Once your commit is complete you need to "Push" your changes up to GitHub. To do this, on the Source Control pane click the Ellipsis and select "Push". Barring any issues the changes will now be pushed up to GitHub, available for cloning and syncing with new users or your other development locations.
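If you would rather do the same stage, commit, and push from the terminal pane, a rough command-line equivalent looks like the sketch below. The file name is a made-up placeholder, and it assumes you are sitting in the folder you cloned earlier.

```powershell
# Run from inside the cloned repository folder.
git add .\Hello-World.ps1          # stage the new file (hypothetical file name)
git commit -m "initial commit"     # record the staged change locally
git push origin HEAD               # push the current branch to the same-named branch on GitHub
```

Pushing HEAD explicitly avoids depending on whether an upstream branch was configured for a freshly created, empty repository.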

There you go, changes are now secure, feel free to break your incredibly complex Hello World and you will be able to recover the last working version and collaborate with others on long term scripts requiring multiple developers.

Bundle DSC Waves for Pull Server Distribution

This assumes you have WinRar installed to the default path, this will also delete the source files after it creates the zip files.

After running this script copy the resulting files to the DSC server in the following location: "C:\Program Files\WindowsPowerShell\DscService\Modules"

$modpath = "-path to dsc wave-"

$output = "-path to save wave to-"

[regex]$reg = "([0-9\.]{3,12})"

if((Test-Path $output) -ne $true){ New-Item -Path $output -ItemType Directory -Force }

foreach($module in (Get-ChildItem -Path $modpath)) {

$psd1 = ($module.FullName+"\"+$module.Name+".psd1")

$content = Get-Content $psd1

foreach($line in $content) {

if($line.Contains("ModuleVersion")) {

$outpath = $output+"\"+$module.Name+"_"+($reg.Match($line).Captures)

Write-Host ""

if(Test-Path -Path $outpath) {

Copy-Item -Path $module.FullName -Destination $outpath -Recurse

}else{

New-Item -Path $outpath -ItemType Directory -Force

Copy-Item -Path $module.FullName -Destination $outpath -Recurse

}

& "C:\Program Files\WinRar\winrar.exe" a -afzip -df -ep1 ($outpath+".zip") $outpath

}

}

}

Start-Sleep -Seconds 1

New-DscCheckSum -Path $output

PowerShell DSC: Remote Monitoring Configuration Propagation

So if you are like me you are not really interested in crossing your fingers and hoping your servers are working right. Which is why it is uniquely frustrating that DSC does not have anything resembling a dashboard (not a complaint really, it is early days, but in practical application not knowing something went down is...not really an option unless you like being sloppy).

The way I build my servers is, I have an XML file with a list of servers, their role, and their role GUID. Baked into the master image is a simple bootstrap script that goes and gets the build script, since I'm using DSC the "build" script doesn't really build much, itself mostly just bootstrapping the DSC process. The first script to run is:

$nodeloc = "\\dscserver\DSC\Nodes\nodes.xml"

# Get node information.

try {

[xml]$nodes = Get-Content -Path $nodeloc -ErrorAction 'Stop'

$role = $nodes.hostname.$env:COMPUTERNAME.role

}

catch{ Write-Host "Could not find matching node, exiting.";Break }

# Set correct build script location.

switch($role) {

"XenAppPKG" { $scriptloc = "\\dscserver\DSC\Scripts\pkgbuild.ps1" }

"XenAppQA" { $scriptloc = "\\dscserver\DSC\Scripts\qabuild.ps1" }

"XenAppProd" { $scriptloc = "\\dscserver\DSC\Scripts\prodbuild.ps1" }

}

Write-Host "Script location set to:"$scriptloc

if((Test-Path -Path "C:\scripts") -ne $true){ New-Item -Path "C:\scripts" -ItemType Directory -Force -ErrorAction 'Stop' }

Write-Host "Checking build script availability..."

while((Test-Path -Path $scriptloc) -ne $true){ Start-Sleep -Seconds 15 }

Write-Host "Fetching build script..."

while((Test-Path -Path "C:\scripts\build.ps1") -ne $true){ Copy-Item -Path $scriptloc -Destination "C:\scripts\build.ps1" -ErrorAction 'SilentlyContinue' }

Write-Host "Executing build script..."

& C:\Windows\System32\WindowsPowerShell\v1.0\powershell.exe -file "C:\scripts\build.ps1"

The information it looks for in the nodes.xml file looks like this:

<hostname>
  <A01 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />
  <A02 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />
  <A03 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />
  <A04 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />
  <B01 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />
  <B02 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />
  <B03 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />
  <B04 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />
</hostname>

I won't go any further into this as most of it has already been covered here before. The main gist is that my solution to this problem relies on the fact that I use the XML file to provision DSC on these machines.
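As a quick illustration of how that lookup behaves, here is a minimal sketch that parses an inline copy of the file and pulls the role and GUID for one host. The function name Get-NodeInfo, the Q01 host, and its GUID are invented for the example; only the element and attribute layout comes from the file above.

```powershell
# Inline, trimmed-down stand-in for nodes.xml (Q01 and its GUID are hypothetical).
[xml]$nodes = @'
<hostname>
  <A01 role="XenAppProd" guid="22e35281-49c6-40f3-9fd7-ad7f8d69c84d" />
  <Q01 role="XenAppQA" guid="00000000-0000-0000-0000-000000000001" />
</hostname>
'@

function Get-NodeInfo {
    param([xml]$Nodes, [string]$ComputerName)
    # Element names are host names, so match on the element's Name.
    $node = $Nodes.hostname.ChildNodes | Where-Object { $_.Name -eq $ComputerName }
    if ($null -eq $node) { return $null }
    [pscustomobject]@{ Name = $node.Name; Role = $node.role; Guid = $node.guid }
}

$info = Get-NodeInfo -Nodes $nodes -ComputerName 'A01'
$info
```

Returning $null for an unknown host lets the caller decide whether to bail out, much like the try/catch in the bootstrap script does.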

There are a couple of modifications I need to make to my DSC config to enable tracking. Note the first item is only there so I can override the GUID from the command line if I want. In reality you could just set the ValueData to ([GUID]::NewGUID()).ToString() and be fine.

The first bit of code takes place before I start my Configuration block; the actual Registry resource is the very last resource in the Configuration block (less chance of false positives due to an error mid-config).

param (

[string]$verGUID = ([GUID]::NewGuid()).ToString()

)

...

Registry verGUID {

Ensure = "Present"

Key = "HKLM:\SOFTWARE\PostBuild"

ValueName = "verGuid"

ValueData = $verGUID

ValueType = "String"

}

From here we get to the important part:

[regex]$node = '(\[Registry\]verGUID[A-Za-z0-9\";\r\n\s=:\\ \-\.\{]*)'

[regex]$guid = '([a-z0-9\-]{36})'

$path = "\\dscserver\Configuration\"

$pkg = @()

$qa = @()

$prod = @()

$watch = @{}

$complete = @{}

[xml]$nodes = (Get-Content "\\dscserver\DSC\Nodes\nodes.xml")

# Find a list of machine names and role guids.

foreach($child in $nodes.hostname.ChildNodes) {

switch($child.Role)

{

"XenAppPKG" { $pkg += $child.Name;$pkgGuid = $child.guid }

"XenAppQA" { $qa += $child.Name;$qaGuid = $child.guid }

"XenAppProd" { $prod += $child.Name;$prodGuid = $child.guid }

}

}

# Convert DSC GUID's to latest verGUID.

$pkgGuid = $guid.Match(($node.Match((Get-Content -Path ($path+$pkgGuid+".mof")))).Captures.Value).Captures.Value

$qaGuid = $guid.Match(($node.Match((Get-Content -Path ($path+$qaGuid+".mof")))).Captures.Value).Captures.Value

$prodGuid = $guid.Match(($node.Match((Get-Content -Path ($path+$prodGuid+".mof")))).Captures.Value).Captures.Value

# See if credentials exist in this session.

if($creds -eq $null){ $creds = (Get-Credential) }

# Make an initial pass, determine configured/incomplete servers.

if($pkg.Count -gt 0 -and $pkgGuid.Length -eq 36) {

foreach($server in $pkg) {

$test = Invoke-Command -ComputerName $server -Credential $creds -ScriptBlock{ (Get-ItemProperty -Path "HKLM:\SOFTWARE\PostBuild" -Name verGUID -ErrorAction 'SilentlyContinue').verGUID }

if($test -ne $pkgGuid) {

Write-Host ("Server {0} does not appear to be configured, adding to watchlist." -f $server)

$watch[$server] = $pkgGuid

}else{

Write-Host ("Server {0} appears to be configured. Adding to completed list." -f $server)

$complete[$server] = $true

}

}

}else{

Write-Host "No Pkg server nodes found or no verGUID detected in Pkg config. Skipping."

}

if($qa.Count -gt 0 -and $qaGuid.Length -eq 36) {

foreach($server in $qa) {

$test = Invoke-Command -ComputerName $server -Credential $creds -ScriptBlock{ (Get-ItemProperty -Path "HKLM:\SOFTWARE\PostBuild" -Name verGUID -ErrorAction 'SilentlyContinue').verGUID }

if($test -ne $qaGuid) {

Write-Host ("Server {0} does not appear to be configured, adding to watchlist." -f $server)

$watch[$server] = $qaGuid

}else{

Write-Host ("Server {0} appears to be configured. Adding to completed list." -f $server)

$complete[$server] = $true

}

}

}else{

Write-Host "No QA server nodes found or no verGUID detected in QA config. Skipping."

}

if($prod.Count -gt 0 -and $prodGuid.Length -eq 36) {

foreach($server in $prod) {

$test = Invoke-Command -ComputerName $server -Credential $creds -ScriptBlock{ (Get-ItemProperty -Path "HKLM:\SOFTWARE\PostBuild" -Name verGUID -ErrorAction 'SilentlyContinue').verGUID }

if($test -ne $prodGuid) {

Write-Host ("Server {0} does not appear to be configured, adding to watchlist." -f $server)

$watch[$server] = $prodGuid

}else{

Write-Host ("Server {0} appears to be configured. Adding to completed list." -f $server)

$complete[$server] = $true

}

}

}else{

Write-Host "No Production server nodes found or no verGUID detected in Production config. Skipping."

}

# Pause for meatbag digestion.

Start-Sleep -Seconds 10

# Monitor incomplete servers until all servers return matching verGUID's.

if($watch.Count -gt 0){ $monitor = $true }else{ $monitor = $false }

while($monitor -ne $false) {

$monitor = $false

$cleaner = @()

foreach($server in $watch.Keys) {

$test = Invoke-Command -ComputerName $server -Credential $creds -ScriptBlock{ (Get-ItemProperty -Path "HKLM:\SOFTWARE\PostBuild" -Name verGUID -ErrorAction 'SilentlyContinue').verGUID }

if($test -eq $watch[$server]) {

$complete[$server] = $true

$cleaner += $server

}else{

$monitor = $true

}

}

foreach($item in $cleaner){ $watch.Remove($item) }

Clear-Host

Write-Host "Configured Servers:`r`n"$complete.Keys

Write-Host "`r`n`r`nIncomplete Servers:`r`n"$watch.Keys

if($monitor -eq $true){ Start-Sleep -Seconds 10 }

}

Clear-Host

Write-Host "Configured Servers:`r`n"$complete.Keys

Write-Host "`r`n`r`nIncomplete Servers:`r`n"$watch.Keys

End of the day is this a perfect solution? No. Bear in mind I just slapped this together to fill a void; things could be objectified, cleaned up, probably streamlined, but honestly a PowerShell script is not a good dashboard. I would also rather the servers themselves flag their progress in a centralized location rather than being pinged by a script.

But that is really something best implemented by the PowerShell devs, as anything 3rd party would, IMO, be rather ugly. So if all we have right now is ugly, I'll take ugly and fast.

As always, use at your own risk, I cannot imagine how you could eat a server with this script but don't go using it as some definitive health-metric. Just use it as a way to get a rough idea of the health of your latest configuration push.
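On the "could be streamlined" front, the three near-identical Pkg/QA/Prod passes could be folded into one helper. This is only a sketch of that idea: the function name Split-ServersByState and the fake reader are invented for illustration, and in real use the reader script block would wrap the Invoke-Command registry check from the script above.

```powershell
function Split-ServersByState {
    param(
        [string[]]$Servers,
        [string]$ExpectedGuid,
        # Script block that takes a server name and returns its current verGUID.
        [scriptblock]$GetActualGuid
    )
    $result = @{ Watch = @{}; Complete = @{} }
    foreach ($server in $Servers) {
        if ((& $GetActualGuid $server) -eq $ExpectedGuid) {
            $result.Complete[$server] = $true
        } else {
            $result.Watch[$server] = $ExpectedGuid
        }
    }
    $result
}

# Stand-in reader so the sketch runs without remoting (hypothetical values).
$fake   = @{ A01 = 'aaaa'; A02 = 'bbbb' }
$reader = { param($server) $fake[$server] }
$state  = Split-ServersByState -Servers 'A01','A02' -ExpectedGuid 'aaaa' -GetActualGuid $reader
```

Passing the reader in as a script block keeps the classification logic checkable without touching a single real server.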

PowerShell: DSC Sometimes Killing The Provider Isn't Enough...

A timely post considering the previous one. I've had a lot of problems with configurations just seemingly not taking effect. The only way I've seen to clear this up is by deleting the following files on the target machine:

"C:\Windows\System32\Configuration\Current.mof"
"C:\Windows\System32\Configuration\Current.mof.checksum"
"C:\Windows\System32\Configuration\DSCEngineCache.mof"
"C:\Windows\System32\Configuration\backup.mof"

In my case I was syncing files and for the life of me could not get it to see the newest addition to the directory. I could delete older files/folders and it would replace them, but it patently refused to ever copy out the new one. Once I deleted these files and let DSC run, I could delete the new file/folder to my heart's content and it would always put it back down the next time DSC passed.

To batch fix your servers (this assumes you have them all in an AD group, you could just create an array and pass it):

if($creds -eq $null){ $creds = Get-Credential }

foreach($member in (Get-ADGroupMember <groupname>)) {

$member.Name

Invoke-Command -ComputerName $member.Name -Credential $creds -Scriptblock{

$strPath = "C:\Windows\System32\Configuration\"

$arFiles = @("Current.mof","Current.mof.checksum","DSCEngineCache.mof","backup.mof")

foreach($item in $arFiles) {

Write-Host "Removing: $strPath$item"

Remove-Item -Path "$strPath$item" -Force

}

}

}

Write-Host "This is a test, and can be removed later."

return $false

Be warned that this should ONLY be done if you are having the problem across the board; otherwise just Invoke-Command on the individual servers or, if only a portion are having problems, just feed in an array. In my case EVERY server was failing on this File operation, so killing it across the board made sense. But it is still not something I would take lightly (batch deleting files never is).

PowerShell: DSC Debug

Looks like killing the WMI provider won't be necessary much longer, based on this blog post.

PowerShell: DSC Example Configuration

I figured I would give a more practical and slightly more complex example config, which you can find here.

Anything in <> was stripped for security's sake but the overall gist of it is there. zAppvImport is a custom DSC resource I wrote to ensure the contents of a path are imported onto a XenApp server. There are a couple of weird things I had to account for in this build, namely the legacy apps and the permissions I need to set. The installer for one no longer exists, so a file copy is the only way, and the other one has a terrible old installer that hangs half the time so it gets the same treatment (it HATES Windows Installer for some reason, which seems to corrupt the files no matter what, so a file copy it is).

This is just an example of a custom App-V XenApp 6.5 server config (that isn't done) that goes from barebones to configured in these few, relatively simple, steps.

PowerShell: DSC, Step By Step

For ease of use here are the crucial bits about DSC, in more or less chronological order:

- Create DSC Pull Server

- Configure Client for DSC Pull

- Create Custom DSC Resource

- Setting It All In Motion

Good luck!

PowerShell: DSC, Custom Modules, Custom Resources, and Timing...

Hypothetical situation, you want to accomplish the following:

- Install the App-V 5.0 SP2 client.

- Configure the client.

- Restart the service.

- Import App-V sequences.

If that sounds simple to you, then you haven't tried it in DSC.

In fairness it isn't THAT complex, but it isn't very straightforward either, and there is little real reason for it to be complicated beyond a simple lack of foresight on the part of the DSC engine.

The first three tasks are very simple: a Package resource, a Registry resource, and a Script resource. But that last bit is tricky, and that is because it needs the modules installed in step 1 in order to work, but given that the DSC engine loads the ENTIRE script (which is normal for PowerShell, but given the nature of what the DSC engine does this is, IMO, a BIG hindrance) they aren't there when it first processes, so it pukes. You can tell this is your problem if you see a "Failed to delete current configuration." error (the config btw should at that point be visible right where WebDownloadManager left it, C:\Windows\Temp\<seriesofdigits>) as well as a complaint that the module at whatever location could not be imported because it does not exist.

So what is the solution?

Sadly kind of convoluted. First let's look at the config. Pretty simple: I call a custom resource that imports the App-V sequences, and in that custom resource I have a snippet of code at the very top:

$modPath = "C:\Program Files\Microsoft Application Virtualization\Client\AppvClient"

if((Test-Path -Path $modPath) -eq $true){ Import-Module $modPath }else{ $bypass = $true }

Now let's look at the script baked into the PVS image:

- Enable WinRM. Easier to do this than undo it in the VERY unlikely case we don't want it, DSC needs it so...

- Create a scripts directory. Not terribly important, you could just bake your script into this path.

- Find this hosts role/guid from an XML file stored on the network.

- Create LCM config.

- Apply LCM config.

- Copy modules from DSC Module share. This overlaps with the DSC config but the DSC config will run intermittently, not just once, for consistency.

- Shell out a start to the Consistency Engine.

This last bit is VERY important in two regards. The first is that if you just run powershell.exe with that command it WILL exit your script. The only way I've found to prevent this is to shell out so that it closes the shell, not your script. StartInfo.UseShellExecute is thus very important.

The second important bit is wiping out the WMI provider, without this it waited three minutes and ran again and promptly behaved like the $bypass was still being tripped, even though I could verify the module WAS in fact in place, I do not like this caching at all.

So the first time I run consistency I know it won't put everything in place, because it needs the client installed before the client modules exist, and even with DependsOn=[Package]Install it still pukes. DependsOn doesn't seem to have any impact on how it loads in the resources.

I wait three minutes because I want to give the client time to install, I don't love this but this is just example code, in reality you would mainly be concerned with two things:

- Is the LCM still running.

- Is the client installed.

So I would probably watch event viewer and the client module folder before making my second run, timing out after ten minutes or so (in this case 15 minutes later the scheduled task will run it again anyway, don't want to get in the way).
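The "watch the module folder, give up after ten minutes" part could be sketched roughly like this. Wait-ForPath is a hypothetical helper name, and this only covers the folder check, not the event viewer or LCM side of things.

```powershell
function Wait-ForPath {
    param(
        [string]$Path,
        [int]$TimeoutSeconds = 600,   # ten minutes, per the text above
        [int]$PollSeconds = 15
    )
    $deadline = (Get-Date).AddSeconds($TimeoutSeconds)
    # Poll until the path shows up or the deadline passes.
    while (-not (Test-Path -Path $Path)) {
        if ((Get-Date) -ge $deadline) { return $false }
        Start-Sleep -Seconds $PollSeconds
    }
    return $true
}

# Example call (commented out so the sketch does not block for ten minutes):
# $ready = Wait-ForPath -Path "C:\Program Files\Microsoft Application Virtualization\Client\AppvClient"
```

A $false return is the cue to stand down and let the scheduled consistency task pick it up on its next run.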

Why bother with this? Mainly because I don't want to wait half an hour for my server to be functional. I run them initially back to back because I can either bake the GUID in, or use a script to "provision" that, while I'm there why rely on the scheduled task? This is on a Server 2008 R2 server so I can't use the Get-ScheduledTask cmdlet, and while yes I could bake in the Consistency task with a shorter trigger and change it in my DSC config...but that is just as much work and more moving parts.

I want to configure and make my initial pass as quickly as is safe to do so, and then allow it to poll for consistency thereafter.

PowerShell: Configure Client for DSC Pull

The final piece of the puzzle here is configuring a client to actually pull its config from the server you created.

One note about the GUID: which one you use depends on how you intend to set up DSC.

There are many ways to skin this cat, you can use one GUID per role, say XenApp = 6311dc98-2c2a-4fbe-a8bc-e662da33148e and App-V = 6b5dac21-6181-400f-8c7a-0dd4bfd0926d, this allows you to keep the GUID count/management low, but also makes it difficult to target specific nodes (though how important that is, I leave up to you, for me, I want all my App-V servers to look alike and the same for my XenApp Servers).

You can generate a GUID on the fly like this:

[guid]::NewGuid().Guid

And then keep track of them in a database. Managing this is going to require more legwork, though, if you provision servers.

Ultimately, for now, I am using the one GUID per role method.

The second note here is that you do NOT want to use HTTP, if you can, PLEASE use SSL, this script does NOT use SSL, but it is harder to find info on setting it up without SSL than with so...remove the following snippet from DownloadManagerCustomData to enforce SSL:

;AllowUnsecureConnection = 'True'
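For context, a WMF 4.0 style LCM pull config tends to look roughly like the sketch below. The configuration name, output path, and server URL are placeholders I made up, and the GUID is just the example XenApp role GUID from above, so treat it as an outline rather than the exact script referenced here.

```powershell
Configuration SetPullMode {
    param([string]$guid)
    Node localhost {
        LocalConfigurationManager {
            ConfigurationID           = $guid
            RefreshMode               = 'Pull'
            ConfigurationMode         = 'ApplyAndAutoCorrect'
            DownloadManagerName       = 'WebDownloadManager'
            DownloadManagerCustomData = @{
                # Hypothetical pull server URL; switch to https when using SSL.
                ServerUrl               = 'http://dscserver:8080/PSDSCPullServer.svc'
                # Remove this entry to enforce SSL.
                AllowUnsecureConnection = 'True'
            }
        }
    }
}

# Writes localhost.meta.mof into the (hypothetical) output folder.
SetPullMode -guid '6311dc98-2c2a-4fbe-a8bc-e662da33148e' -OutputPath 'C:\scripts\LCM'
```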

Once you have this tweaked to your liking you can apply the configuration by running:

Set-DscLocalConfigurationManager -Path

PowerShell: Create DSC Pull Server

This is a slightly modified version of the script example MS posted to the Gallery. This one a) works and b) installs EVERYTHING you need to build a DSC Pull server, not just hope it's there.

The custom resource: Can be found here.

The script: Can be found here.

I would advise extracting the files to C:\Windows\System32\WindowsPowershell\v1.0\Modules and making sure you right click->Properties->Unblock the .ps1 files.

Once you create configs they go here: C:\Program Files\WindowsPowerShell\DscService\Configuration

Remember, for Pull mode the node names MUST be GUID's and those GUID's MUST match the clients ConfigurationID, you can see the clients CID by typing:

Get-DscLocalConfigurationManager

PowerShell: DSC Quirks, Part 3

Importing module MSFT_xDSCWebService failed with error - File C:\Program Files\WindowsPowerShell\Modules\xPSDesiredStateConfiguration_1.1\xPSDesiredStateConfiguration_1.1\xPSDesiredStateConfiguration\DscResources\MSFT_xDSCWebService\MSFT_xDSCWebService.psm1 cannot be loaded because you opted not to run this software now.

Copy it to C:\Windows\System32\WindowsPowerShell\v1.0\Modules

At this point I would ignore MS and just always put your modules there.

PowerShell: DSC Quirks, Part 2

In this episode:

Why does my config seem to use an old resource version?

Why is WmiPrvSE using up SO much memory?

The solution to both of these is actually the same:

gps wmi* | ?{ $_.Modules.ModuleName -like "*DSC*" } | Stop-Process -Confirm:$false -Force

This kills the WMI provider for DSC and forces it to launch a new one. As to why, well in the first instance I imagine the engine caches the script, and I bet there is a timeout before it will pick it up again. In the second case...it's WMI, either your script or the machine or just the alignment of the planets made it all emo, kill it and move on.

This command, btw, can absolutely be run against multiple machines remotely via WinRM. Not only that but it can be monitored remotely as well. So if you have a problem, script out a monitor/resolver. It seems fairly sturdy in the sense that if you kill it mid-action it just bombs that run; next time the DSC task runs it fires up a new instance and goes on about its business. If the problem is chronic, I would review your code...
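A minimal sketch of the remote version, assuming WinRM is already enabled on the targets and your account has rights on them. The server names are placeholders.

```powershell
# Hypothetical host names; feed in whatever list you actually have.
$servers = 'A01', 'A02'

Invoke-Command -ComputerName $servers -ScriptBlock {
    # Same filter as the one-liner above, spelled out without aliases.
    Get-Process -Name wmi* |
        Where-Object { $_.Modules.ModuleName -like '*DSC*' } |
        Stop-Process -Force -Confirm:$false
}
```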

PowerShell: DSC Quirks, Part 1

Just going to catalog a few of the things I've seen crop up from time to time with DSC, starting with:

- Cannot Import-Module

- Cannot import custom DSC resource (even though Get-DscResource says it is there)

For that first one the solution is usually pretty simple, the AppvClient module is case in point. Instead of this:

Import-Module AppvClient

Do this:

Import-Module "C:\Program Files\Microsoft Application Virtualization\Client\AppvClient"

You can find the exact location by typing:

(Get-Module AppvClient).Path

The folder, and this is important, the FOLDER the .psd1 is in should be provided to Import-Module; it will do the rest. Don't try to point it right at the .psd1 or anything else for that matter.

The second problem I have only seen on Server 2008 R2 with WMF 4.0 installed. What you will no doubt quickly learn is that you should put your DSC modules here:

C:\Program Files\WindowsPowershell\Modules

Or worst case if x86, here:

C:\Program Files (x86)\WindowsPowershell\Modules

And if you put them there and type:

Get-DscResource

They will indeed show up; run a config using them, however, and it will error out saying they couldn't be found. Unless you put them here:

C:\Windows\System32\WindowsPowerShell\v1.0\Modules

Why does it do this? I don't know, I suspect it is because WMF 4.0 is rather tacked on by now when it comes to Server 2008 R2, frankly if this is the price I have to pay for being able to use this on XenApp 6.5 servers, so be it!

PowerShell: DSC Custom Resource

IMO the DSC information out there right now is kind of all over the place, and worse some of it is outdated, so I'm going to collect a few guides here, starting a bit out of order with a guide on how to write your own custom DSC resource. Later I'll put one together for configuring a DSC Pull server and then one on how to configure a client for DSC Pull operations.

First things first, you need this: xDscResourceDesigner

We won't be using any of the other functionality of it, but it at the very least is handy for creating the initial structure. Extract it like it says, but most likely, depending on your setup, you are going to need to temporarily run with an Unrestricted ExecutionPolicy. I couldn't unflag it to save my life and frankly didn't spend much time on it. Remember to set your policy back after using it and there are no worries.

Anyway, the first thing you need to know is, what do you need to know? We are going to create something really simple here, a DSC Resource to make sure a file exists and set its contents. So we need a Path and Contents, then we need the mandatory Ensure variable, so our first bit of code is going to look like this:
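A rough sketch of that first step, assuming the New-xDscResourceProperty and New-xDscResource cmdlets that ship with xDscResourceDesigner. The property attributes chosen here (Path as Key, the other two as Write) and the Modules location are my assumptions; the call is guarded so nothing happens if the designer module isn't loaded.

```powershell
# Property definitions for the zTest resource (attribute choices are assumptions)
$propertySpecs = @(
    @{ Name = 'Path';    Type = 'String'; Attribute = 'Key' }
    @{ Name = 'Content'; Type = 'String'; Attribute = 'Write' }
    @{ Name = 'Ensure';  Type = 'String'; Attribute = 'Write'; ValidateSet = 'Present', 'Absent' }
)

# Assumed location: the Modules folder that Get-DscResource searches
$modulesRoot = 'C:\Program Files\WindowsPowerShell\Modules'

# Only build the resource when the designer cmdlets are actually available
if (Get-Command -Name New-xDscResource -ErrorAction SilentlyContinue) {
    # Splat each definition into New-xDscResourceProperty
    $properties = foreach ($spec in $propertySpecs) { New-xDscResourceProperty @spec }

    # Creates the zTest module folder, its DSCResources sub-folder, the schema MOF and a stub .psm1
    New-xDscResource -Name 'zTest' -Property $properties -Path $modulesRoot -ModuleName 'zTest'
}
```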

What is essentially going on here is we are setting up our variables and then building the folder structure and MOF for our custom resource, saving it all to our PSModules path. Once you run this command you should be able to Get-DscResource and see zTest at the bottom of the list.

Now we have a script to modify, this script is located in the DSCResources sub-folder in the path we just specified. There are a couple things to know about this:

The Test Phase

This is where LCM looks to validate the state of the asset you are trying to configure. In our case, does this file exist? And what are its contents? So the content of your Test-TargetResource script will probably look a little something like:

if((Test-Path $Path) -eq $true){ if((Get-Content $Path) -eq $Content){ $return = $true }else{ $return = $false } }else{ $return = $false }

That is pretty messy and all one line, but you get the idea: there is no logic built in, you need to provide all the logic. Whether that be ensuring that you don't just assume that because the file exists it must have the right contents, or the logic to ensure that if $Ensure is set to "Absent" that you are removing the file thoroughly, handling errors, not outputting to host, etc. etc.
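A slightly fuller Test-TargetResource could look something like the sketch below. It is only one way to do it: the parameter names follow the Path/Content/Ensure properties used in this post, and trimming the trailing newline before comparing is my own choice, made because Set-Content appends one.

```powershell
function Test-TargetResource {
    [CmdletBinding()]
    [OutputType([bool])]
    param(
        [Parameter(Mandatory)] [string] $Path,
        [string] $Content,
        [ValidateSet('Present', 'Absent')] [string] $Ensure = 'Present'
    )

    $exists = Test-Path -LiteralPath $Path -PathType Leaf

    if ($Ensure -eq 'Absent') {
        # In compliance only when the file is gone
        return (-not $exists)
    }

    if (-not $exists) { return $false }

    # Read the whole file as one string and drop trailing line breaks
    $actual = [System.IO.File]::ReadAllText($Path).TrimEnd([char[]]"`r`n")

    # Case-sensitive comparison against the desired content
    return ($actual -ceq $Content)
}
```

Note that nothing is written to the host and the only output is a single boolean.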

So assuming you do not have the file already and we run this resource, it will run through the Test-TargetResource and return $false, at which point if you set Ensure="Absent" it will be done, if you set it to "Present" it will move on to Set-TargetResource. Which will probably look something like this:

if($Ensure -eq "Present") {

New-Item -Path $Path -ItemType File -Force

Set-Content -Path $Path -Value $Content

}elseif($Ensure -eq "Absent") {

Remove-Item -Path $Path -Force -Confirm:$false

}

Again, this is quick and dirty and not meant to represent the most robust function possible for creating/populating a file or deleting it thoroughly. Liberal use of try/catch and thinking about ways it could go wrong (including doing a little of your own validation to make sure what you just did took hold) are recommended.
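As a sketch of what "a little more robust" might mean here, the version below wraps the work in try/catch and re-checks the result afterwards. The error messages are placeholders of my own, and it still assumes the parent folder already exists.

```powershell
function Set-TargetResource {
    [CmdletBinding()]
    param(
        [Parameter(Mandatory)] [string] $Path,
        [string] $Content,
        [ValidateSet('Present', 'Absent')] [string] $Ensure = 'Present'
    )

    try {
        if ($Ensure -eq 'Present') {
            # Set-Content creates the file if it is missing and overwrites it otherwise
            Set-Content -LiteralPath $Path -Value $Content -Force -ErrorAction Stop

            # Validate that the write actually took hold
            if (-not (Test-Path -LiteralPath $Path -PathType Leaf)) {
                throw "File '$Path' was not created."
            }
        }
        elseif (Test-Path -LiteralPath $Path) {
            Remove-Item -LiteralPath $Path -Force -Confirm:$false -ErrorAction Stop

            if (Test-Path -LiteralPath $Path) {
                throw "File '$Path' still exists after removal."
            }
        }
    }
    catch {
        # Re-throw with context so the failure shows up in the LCM output
        throw "zTest could not enforce Ensure='$Ensure' on '$Path': $($_.Exception.Message)"
    }
}
```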

The Test function MUST return $true or $false, Set does not need to (should not?) return anything at all, which leaves: Get-TargetResource

For now I'm not going to go too far into this, I'll just say that in reality this appears to be an informational function that allows you to pull information about the asset in question without actually doing anything. It MUST return a hashtable of information about the object (usually the same information, sans Ensure, that you provide to the script). i.e.

$returnVal = @{

Path = $Path

Content = (Get-Content -Path $Path)

Ensure = $Ensure

}

Again how strictly this is enforced and how much is really needed...in this example I would almost say not at all. You could just as easily replace $Path with (Test-Path -Path $Path) and drop Ensure entirely I think. I haven't tested the boundaries of how strictly you have to adhere to input -> output in this case.
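For what it's worth, here is a sketch of a Get-TargetResource that reports what is actually on disk rather than echoing its inputs. Taking only Path as a parameter and deriving Ensure from the file's existence are assumptions on my part, not something the LCM demands.

```powershell
function Get-TargetResource {
    [CmdletBinding()]
    [OutputType([hashtable])]
    param(
        [Parameter(Mandatory)] [string] $Path
    )

    if (Test-Path -LiteralPath $Path -PathType Leaf) {
        $ensure  = 'Present'
        $content = [System.IO.File]::ReadAllText($Path)
    }
    else {
        $ensure  = 'Absent'
        $content = $null
    }

    # Informational only: nothing on the machine is changed
    return @{
        Path    = $Path
        Content = $content
        Ensure  = $ensure
    }
}
```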

Once all of this is done you should be able to do the following and get a usable mof ready to be executed:

Configuration Test {

Import-DscResource -Module zTest

Node "localhost" {

zTest Testing {

Ensure = "Present"

Path = "C:\test.txt"

Content = "Hello (automated) World!"

}

}

}

$output = "C:\"

Test -OutputPath $output

Start-DscConfiguration -Verbose -Wait -Force -Path $output

Please note that the OutputPath argument isn't required. Without it, the script will create a folder called Test in whatever folder you are currently in and put the MOF in there. I like to specify it so I know where my MOFs are. Once you call it with that final command you should see LCM spit out a bunch of information regarding the process, and any errors will show up there as well as in the Event Viewer.

Please note that a well written DSC Resource should be able to be run a million times and only touch something if it isn't in compliance. Meaning that if the script doesn't detect the file is missing or the contents are different from what they should be, it should effectively do nothing at all. It shouldn't set it anyway just to be sure, it shouldn't assume, it shouldn't cut corners, or hope and pray. The entire point here is to ensure consistent state across an environment. The more reliable, resilient, forward thinking and robust your script is, the better your resource will be.

In the case of this Resource, if I planned on taking it farther I would probably make sure I had rights to the file before messing with it, include a try/catch around file creation and content placement, I would check the file existed once more with a fresh piece of memory (if you end up setting the content to a variable) and validate the contents afterwards as well. You could build in the option to allow forcing a specific encoding type to convert between UTF-32 and UTF-8 or ASCII if you wanted, provide options for Base64 input encoding (in case you wanted to handle script strings for instance, or you could make sure to escape and trim as needed) etc. etc.

The point being, it is easy to write something quick that will rather blindly carry out an action. But in the long run you need something that is going to be feature rich and robust to match.

And don't forget, if you don't copy the folder structure to the client machines, they won't have access to your Resource. The path we created this in on our workstation is the same path it needs to live in on every other machine, and once it does, they too will be able to use the Resource.
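Copying it out can be scripted too. A small sketch, assuming you can reach each machine's Modules folder (for example over the administrative C$ share); the function name Copy-DscResourceModule and the server name in the example are made up.

```powershell
function Copy-DscResourceModule {
    [CmdletBinding()]
    param(
        # The module folder on the workstation, e.g. ...\Modules\zTest
        [Parameter(Mandatory)] [string] $SourceModulePath,

        # One or more Modules folders to copy the module folder into
        [Parameter(Mandatory)] [string[]] $DestinationModulesRoot
    )

    foreach ($root in $DestinationModulesRoot) {
        # Copies the whole folder structure, overwriting older files
        Copy-Item -LiteralPath $SourceModulePath -Destination $root -Recurse -Force -ErrorAction Stop
    }
}

# Example with a hypothetical server name:
# Copy-DscResourceModule -SourceModulePath 'C:\Program Files\WindowsPowerShell\Modules\zTest' `
#     -DestinationModulesRoot '\\server01\C$\Program Files\WindowsPowerShell\Modules'
```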

App-V 5: Collect icons for XenApp 6 publishing.

Quick script you can run on a machine that will look at any App-V app cached on that machine, collect the icon file for it, and spit it out into a structured directory.

Click here for the script.

Connect Network Printer via WMI

Very simple example, this can be run from any PowerShell CLI:

([wmiclass]"Win32_Printer").AddPrinterConnection("\\<server>\<port>")

ex: <server> = printsrv01, <port> = R1414

The specific path should be fairly obvious if you browse to the printer server hosting the printer in question, it should show up as a device and you can just concatenate that onto the end and pipe it to WMI, simple.

PowerShell: Random Password Generator.

Quick little script to generate a password meeting simple complexity (guaranteed one upper and one number at random positions in the string) guidelines.

$upper = 65..90 | ForEach-Object{ [char]$_ }

$lower = 97..122 | ForEach-Object{ [char]$_ }

$number = 48..57 | ForEach-Object{ [char]$_ }

$total = @()

$total += $upper

$total += $number

$total += $lower

$rand = ""

$i = 0

while($i -lt 10){ $rand += $total | Get-Random; $i++ }

"Initial: "+$rand

$rand = $rand.ToCharArray()

$num = 1..$rand.Count | Get-Random

$up = $num

while($up -eq $num){ $up = 1..$rand.Count | Get-Random }

"num "+$num

"up "+$up

$rand[($num-1)] = $number | Get-Random

$rand[($up-1)] = $upper | Get-Random

$rand = $rand -join ""

"Final: "+$rand

PowerShell: Get Remote Desktop Services User Profile Path from AD.

Bit goofy this, when trying to get profile path information for a user you may think Get-ADUser will provide you anything you would likely see in the dialog in AD Users and Computers, but you would be wrong. Get-ADUser -Identity <username> -Properties * does not yield a 'terminalservicesprofilepath' attribute.

Instead you must do the following:

([ADSI]"LDAP://<user DN>").terminalservicesprofilepath

You can use Get-ADUser to retrieve the DN for a user or any other method you prefer.

Citrix: Presentation Server 4.5, list applications and groups.

Quick script to connect to a 4.5 farm and pull a list of applications and associate them to the groups that control access to them. You will need to do a few things before this works:

If you are running this remotely you need to be in the "Distributed COM Users" group (Server 2k3) and will need to set up DCOM for impersonation. You can do this by running "Component Services", drilling down to the "local computer", right-clicking it and choosing Properties; under the general properties, the third option should be set to Impersonate.

Finally you will need View rights to the Farm. If you are doing this remotely there is a VERY strong chance of failure if the account you are LOGGED IN AS is not a "View Only" or higher admin in Citrix. RunAs seems to be incredibly hit or miss, mostly miss.

$start = Get-Date
$farm = New-Object -ComObject MetaFrameCOM.MetaFrameFarm
$farm.Initialize(1)

# Two columns per application: name, comma-separated groups
$apps = New-Object 'object[,]' $farm.Applications.Count,2
$row = 0

# Everything after the last "/" of the distinguished name
[regex]$reg = '([^/]*)$'

foreach($i in $farm.Applications) {
    $i.LoadData($true)
    [string]$groups = ""
    $clean = $reg.Match($i.DistinguishedName).Value
    $apps[$row,0] = $clean
    foreach($j in $i.Groups) {
        if($groups.Length -lt 1){
            $groups = $j.GroupName
        }else{
            $groups = $groups+","+$j.GroupName
        }
    }
    $apps[$row,1] = $groups
    $row++
}

# Dump the array into a new Excel sheet in one shot
$excel = New-Object -ComObject Excel.Application
$excel.Visible = $true
$excel.DisplayAlerts = $false
$workbook = $excel.Workbooks.Add()
$sheet = $workbook.Worksheets.Item(1)
$sheet.Name = "Dashboard"
$range = $sheet.Range("A1",("B"+$farm.Applications.Count))
$range.Value2 = $apps

# Elapsed time in minutes
$(New-TimeSpan -Start $start -End (Get-Date)).TotalMinutes